HKU Business School-Led Research Team Uses Mobile Phone Data to Understand How Family Social Networks Respond to Disasters

An international research team led by Dr. Jayson Jia, Associate Professor of Marketing at HKU Business School, investigated how family social networks respond to the shock of a sudden natural disaster. The research has just been published in Nature Communications, a leading scientific journal.

The ability of people to marshal support from their social networks in the face of setbacks is a critical survival mechanism. However, there is very little large-scale empirical data on the social network basis of families’ response to risk and disaster.

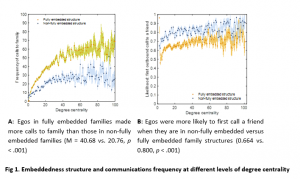

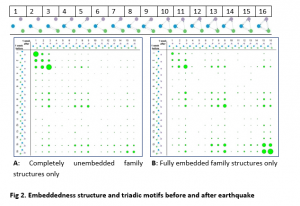

Using anonymised individual-level mobile phone data, the team studied how 35,565 earthquake victims, all of them members of family phone plans, communicated with family and non-family contacts after a magnitude Ms 7.0 earthquake. Treating the earthquake as a natural experiment, the research shows that ‘structure of embeddedness’ (a novel construct and the team’s main theoretical contribution) explains post-disaster communication patterns. The research measured real social and communication behaviours: how quickly people made calls after the earthquake (i.e., how quickly they activated their social networks), whom they called, how many people they called (the size of the social network recruited), and how reciprocal the calls were (a measure of relationship importance).
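To make the four behavioural measures more concrete, here is a minimal Python sketch computing three of them from call records. It assumes a hypothetical record layout of (caller, callee, start time), made-up IDs, and an arbitrary reference time; the reciprocity formula (smaller call direction divided by larger) is one common convention chosen for illustration, not necessarily the definition used in the paper.

```python
from datetime import datetime, timedelta
from typing import List, Optional, Set, Tuple

# Hypothetical call-detail record: (caller_id, callee_id, call_start_time).
# This layout is an illustrative assumption, not the study's data schema.
CallRecord = Tuple[str, str, datetime]


def first_call_delay_minutes(records: List[CallRecord], person: str,
                             quake_time: datetime) -> Optional[float]:
    """Minutes from the quake until `person` places a first outgoing call, or None."""
    times = [t for caller, _, t in records if caller == person and t >= quake_time]
    if not times:
        return None
    return (min(times) - quake_time).total_seconds() / 60


def contacts_called(records: List[CallRecord], person: str, since: datetime) -> Set[str]:
    """Distinct people `person` called at or after `since` (network size recruited)."""
    return {callee for caller, callee, t in records if caller == person and t >= since}


def dyad_reciprocity(records: List[CallRecord], a: str, b: str) -> float:
    """Ratio of the smaller to the larger call direction: 1.0 balanced, 0.0 one-way."""
    a_to_b = sum(1 for caller, callee, _ in records if (caller, callee) == (a, b))
    b_to_a = sum(1 for caller, callee, _ in records if (caller, callee) == (b, a))
    larger = max(a_to_b, b_to_a)
    return min(a_to_b, b_to_a) / larger if larger else 0.0


# Toy usage with made-up IDs and an arbitrary reference time.
quake = datetime(2000, 1, 1, 12, 0)
records = [
    ("A", "B", quake + timedelta(minutes=3)),
    ("B", "A", quake + timedelta(minutes=10)),
    ("A", "C", quake + timedelta(minutes=5)),
    ("A", "B", quake + timedelta(hours=2)),
]
print(first_call_delay_minutes(records, "A", quake))  # 3.0
print(sorted(contacts_called(records, "A", quake)))   # ['B', 'C']
print(dyad_reciprocity(records, "A", "B"))            # 0.5
```

Here A called B twice and B called A once, so the pair scores 0.5; a pair with calls in only one direction would score 0.0.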

The paper’s primary theoretical contribution is a new, structure-based measure of embeddedness, one of the most important and fundamental concepts in social network research. Embeddedness is the idea that third parties affect the relationship between two people (for example, a couple might be closer if they share friends). Previous research has measured embeddedness with simple counts, such as the number of friends two people have in common. Dr. Jia’s team developed a new way to measure the structure of how people share social relationships and resources, examining the triadic (three-person) structure of those shared ties. This increase in complexity is like moving from 2-D to 3-D.
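The difference between counting and structure can be illustrated with a toy network. In the Python sketch below, `common_friends` is the traditional count-based measure described above. `shared_friend_ties` is a hypothetical extra statistic (whether the shared friends themselves know each other), included only to show the kind of structure a raw count misses; it is not the triadic measure from the paper, and the network is invented.

```python
from itertools import combinations
from typing import Dict, Iterable, Set, Tuple

Graph = Dict[str, Set[str]]  # undirected graph as adjacency sets


def make_graph(edges: Iterable[Tuple[str, str]]) -> Graph:
    """Build an undirected adjacency-set graph from (u, v) pairs."""
    g: Graph = {}
    for u, v in edges:
        g.setdefault(u, set()).add(v)
        g.setdefault(v, set()).add(u)
    return g


def common_friends(g: Graph, a: str, b: str) -> int:
    """Traditional count-based embeddedness: contacts shared by a and b."""
    return len(g[a] & g[b])


def shared_friend_ties(g: Graph, a: str, b: str) -> int:
    """Hypothetical structural detail: pairs of shared friends who also know each other."""
    shared = g[a] & g[b]
    return sum(1 for x, y in combinations(sorted(shared), 2) if y in g[x])


# Two toy couples, each with two shared friends. Only in the first couple
# do the shared friends (X, Y) know each other; P and Q do not.
g = make_graph([
    ("A", "B"), ("A", "X"), ("B", "X"), ("A", "Y"), ("B", "Y"), ("X", "Y"),
    ("C", "D"), ("C", "P"), ("D", "P"), ("C", "Q"), ("D", "Q"),
])
print(common_friends(g, "A", "B"), common_friends(g, "C", "D"))          # 2 2
print(shared_friend_ties(g, "A", "B"), shared_friend_ties(g, "C", "D"))  # 1 0
```

Both couples look identical under the common-friends count, yet their surrounding structure differs, which is the gap that structure-based measures aim to capture.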

Dr. Jia’s team found that embeddedness structure predicts many real-world behaviours that traditional measures could not. Earthquake victims in more embedded family structures (more shared social relationships between family members) were more likely to call other family plan members first after the earthquake, and this tendency was stronger where earthquake intensity was higher. In the weeks after the event, individuals in more embedded family structures displayed higher levels of intra-family coordination and mobilised more social connections. Overall, a family’s history (and structure) of sharing social relationships, rather than the strength of the relationships between individual family members, best predicted the family’s social response to the earthquake.

This research also offers new insights into post-disaster communication dynamics and social behaviour during natural disasters. Although social networks are often studied under stable environmental conditions, exogenous shocks such as natural disasters have been the rule rather than the exception throughout human history. Environmental disruption remains common today: in the decade 2004–2013 alone, 6,525 recorded disasters (3,867 of them natural) caused more than 1 million deaths and US$1 trillion in economic damage. Although the earthquake studied here is very different from a pandemic, the research team expects COVID-19 to stimulate greater research and policy interest in the social aspects of disasters, especially in how social systems and units remain resilient and recover from them.

Jia, J.S., Li, Y., Lu, X. et al. Triadic embeddedness structure in family networks predicts mobile communication response to a sudden natural disaster. Nature Communications 12, 4286 (2021). https://doi.org/10.1038/s41467-021-24606-7

Article free to read at: https://www.nature.com/articles/s41467-021-24606-7

PDF download at: https://rdcu.be/cogG3